Kintsugi

Mad Devs helped develop Kintsugi, a platform that uses deep learning and voice biomarkers to detect signs of clinical depression and anxiety.

Overview

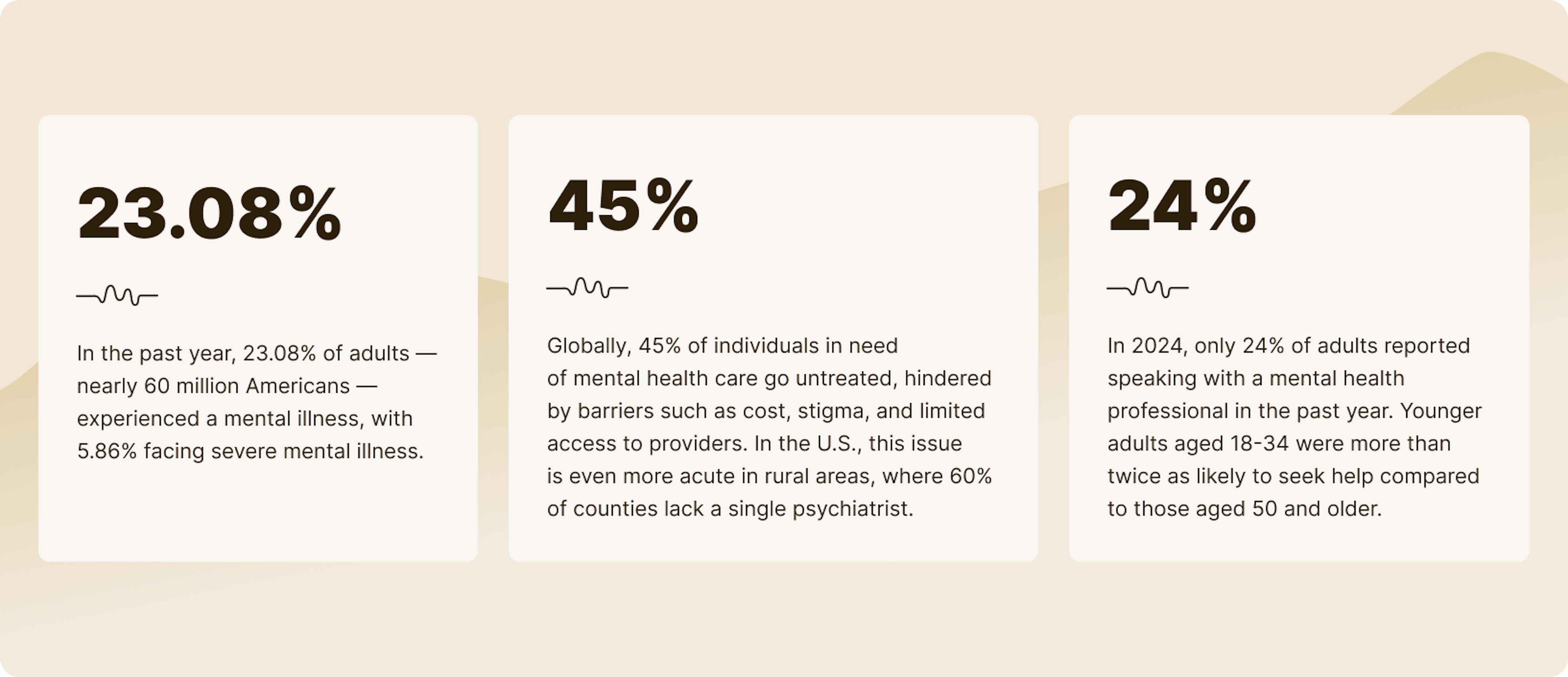

Mental health challenges are pervasive, yet often underdiagnosed. Only about 2% of primary care patients are screened for depression, and access to appropriate treatment remains a significant issue.

This limited availability often leads to delayed diagnosis and treatment, exacerbating the impact of mental health conditions:

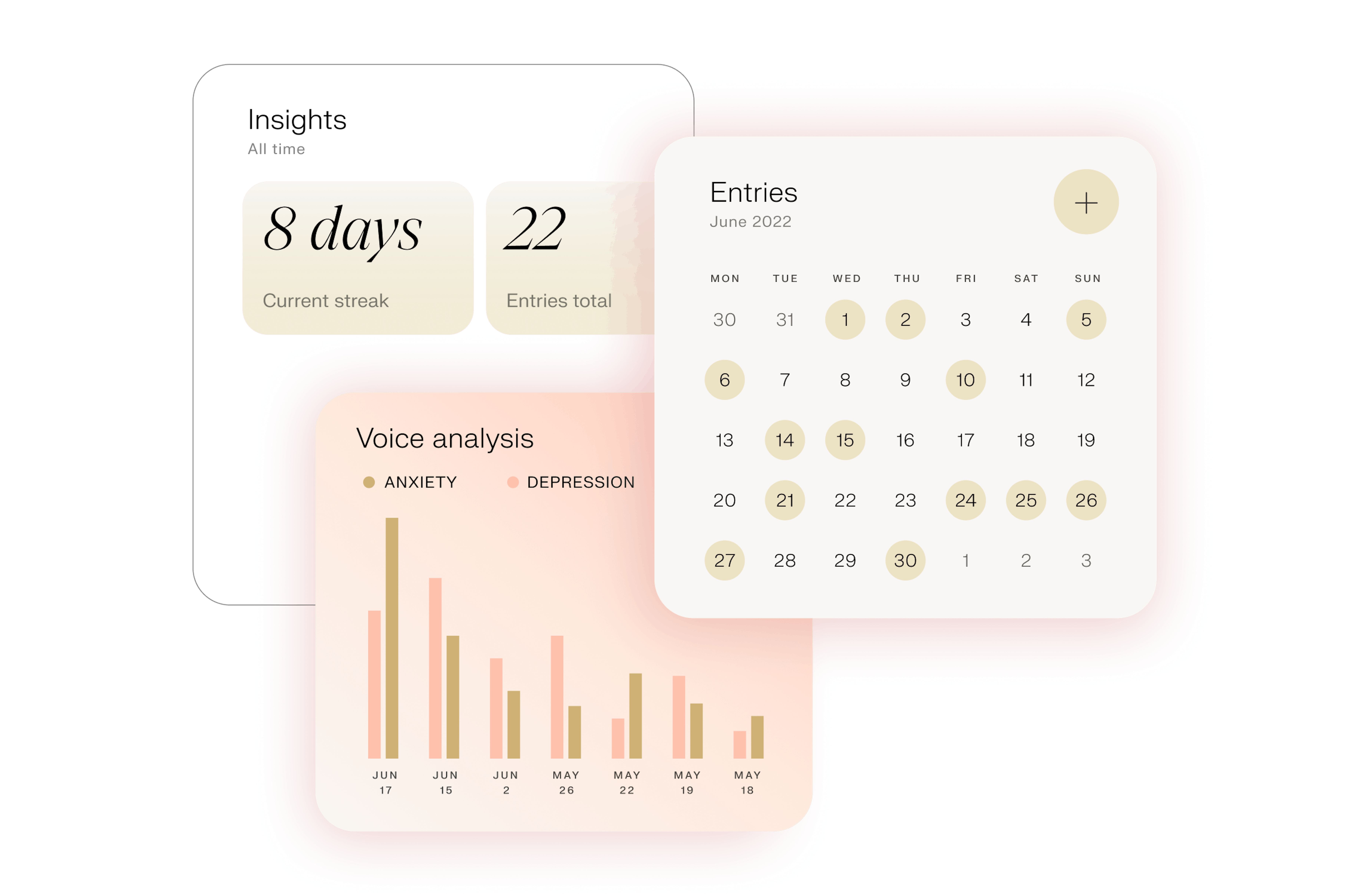

Kintsugi is a groundbreaking AI solution that rapidly screens for depression and anxiety by analyzing voice biomarkers.

Voice biomarkers are unique characteristics in a person’s voice that can reveal insights about their mental and physical health. These markers are identified through subtle variations in tone, pitch, pace, and rhythm, which deep learning can analyze to detect signs of mental health conditions like depression and anxiety.
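Pitch is one of these characteristics, and it can be measured directly from audio. The following is a minimal sketch of one classic signal-processing technique, autocorrelation-based pitch estimation. It is not Kintsugi's method, because the case study does not describe its feature pipeline. The function name `estimate_pitch_hz` and the assumed 75 to 400 Hz pitch range are illustrative assumptions.

```python
import math


def estimate_pitch_hz(samples, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate the fundamental frequency (pitch) of one non-empty voiced frame.

    Illustrative sketch: picks the lag with the highest autocorrelation within
    the assumed fmin..fmax pitch range and converts that lag to Hz.
    Returns None when no positive correlation is found (e.g. silence).
    """
    n = len(samples)
    mean = sum(samples) / n
    x = [s - mean for s in samples]  # remove any DC offset
    min_lag = int(sample_rate / fmax)  # shortest period considered, in samples
    max_lag = min(int(sample_rate / fmin), n - 1)  # longest period considered
    best_lag, best_corr = 0, 0.0
    for lag in range(min_lag, max_lag + 1):
        corr = sum(x[i] * x[i + lag] for i in range(n - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag if best_lag else None


if __name__ == "__main__":
    sr = 8000
    # 0.1 s of a synthetic "voice": a pure 200 Hz tone
    frame = [math.sin(2 * math.pi * 200 * t / sr) for t in range(800)]
    print(estimate_pitch_hz(frame, sr))  # 200.0
```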

Kintsugi Voice provides a seamless way to identify, triage, and monitor mental health conditions across its healthcare partners in the U.S. and abroad.

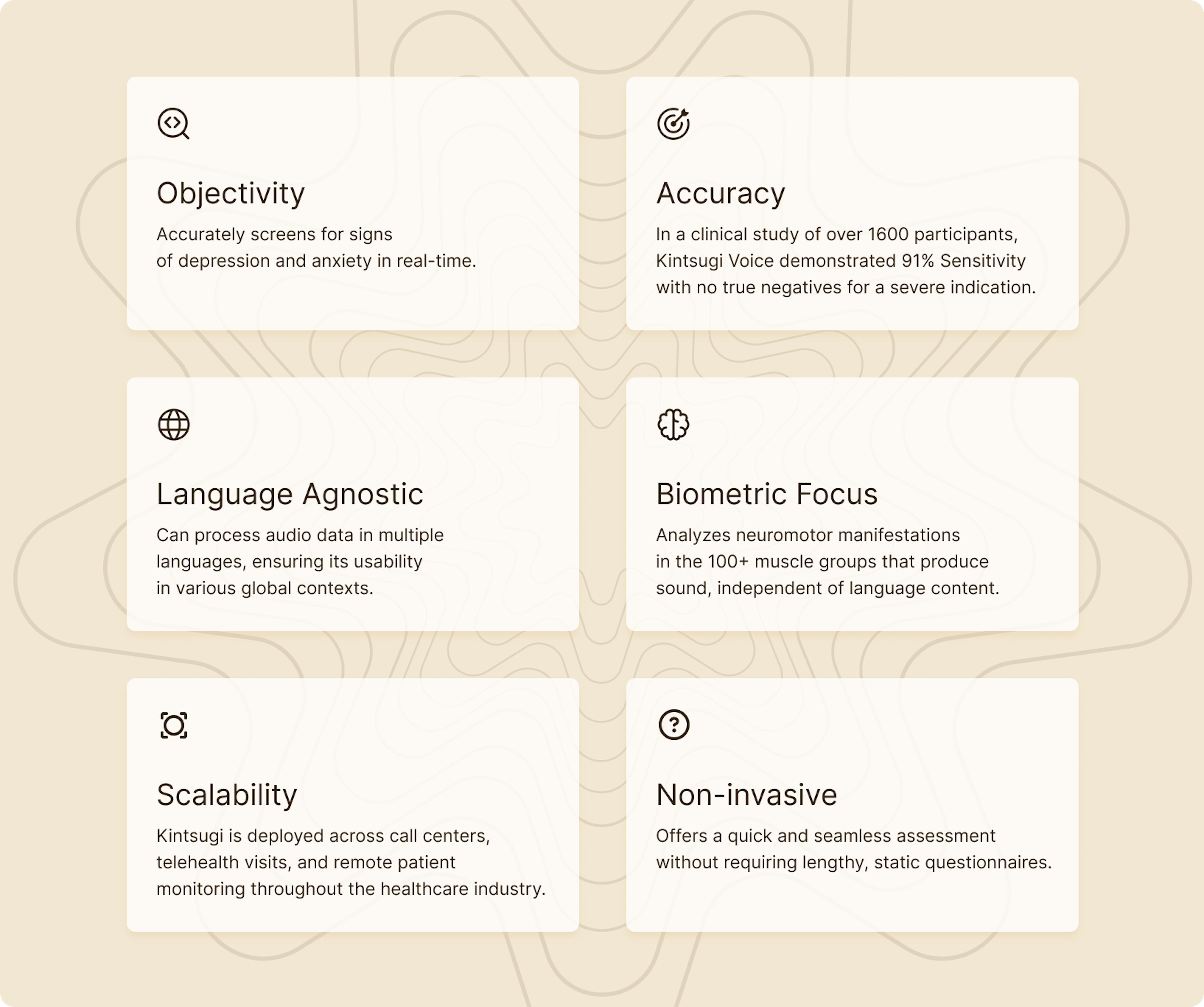

Kintsugi Voice benefits

Kintsugi partnered with Mad Devs for backend development and DevOps support to scale its platform services.

Challenges and solutions

DevOps scope

Mad Devs' team started from an established infrastructure and worked with Kintsugi's in-house tech architects and leadership to expand its capabilities and keep it stable at scale.

Migrating from a monolithic architecture to a microservices-based system required significant planning and a thorough overhaul of both infrastructure and development processes.

The goals

Key project elements we worked with:

- Microservices (~5): Breaking the monolithic structure into smaller, independently deployable services for a more modular system.

- Mobile application support: Ensuring the infrastructure could handle the backend demands of a mobile app, including smooth scaling and performance management.

- Scalability and high availability: Implementing mechanisms that let the system scale dynamically and handle failover without service disruption.

- Metrics, logs, and alerting: Establishing robust monitoring with real-time metrics, logs, and alerts to track the health and performance of the infrastructure and applications.

- CI/CD pipeline: Automating the integration and deployment processes to ensure quick and reliable software releases.

- Google Cloud infrastructure: Hosting the infrastructure on Google Cloud, using Cloud SQL (PostgreSQL) for databases, Redis for caching, and Google Cloud Storage (GCS) for storage.

- Three environments (dev/staging/production): Setting up separate infrastructure for each environment to support development, testing, and production deployment.

- Infrastructure as code (IaC): Managing the entire infrastructure setup through code, allowing for automation, consistency, and ease of scaling.

- Secret management: Securely managing sensitive data, such as API keys and credentials, across all environments.

- Access control: Managing permissions and access to the GCP project and the code repositories to ensure security and controlled access.
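The case study does not say how dynamic scaling was implemented. Since Kubernetes is in the stack, one common option is the Horizontal Pod Autoscaler (HPA). Assuming that option, the sketch below reproduces the core replica calculation documented for the HPA, `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)`, including its default 0.1 tolerance and clamping to min/max bounds. The function name, parameter names, and the default `max_replicas=10` are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count per the Kubernetes HPA formula (sketch; names are hypothetical).

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped to bounds.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no scaling action
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))   # 6: 90% CPU vs. a 60% target scales out
    print(desired_replicas(4, 63, 60))   # 4: within the 10% tolerance
    print(desired_replicas(10, 30, 60))  # 5: half the target load scales in
    print(desired_replicas(8, 90, 60))   # 10: 12 would exceed max_replicas
```

The real HPA also applies stabilization windows and scaling policies to avoid flapping, which this sketch omits.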

Key results

- Over eight months, the team successfully prepared and launched development environments, deployed applications, and established a CI/CD pipeline.

- Additional requirements were implemented, such as RabbitMQ-based queue processing and automatic application scaling.

- The project's infrastructure costs were also optimized.
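Queue processing with RabbitMQ commonly follows the competing-consumers pattern. Several workers pull from one queue, acknowledge the messages they handle, and requeue or dead-letter the ones that fail. The sketch below illustrates that pattern with Python's standard `queue.Queue` and threads, which stand in for a RabbitMQ broker and client library. `run_workers`, `max_attempts`, and the dead-letter list are hypothetical and are not the project's code.

```python
import queue
import threading


def run_workers(messages, handler, num_workers=3, max_attempts=3):
    """Competing consumers over one in-process queue (stand-in for a RabbitMQ queue).

    Returns (results, dead_letters). Failed messages are requeued until they
    have been tried max_attempts times, then moved to dead_letters.
    """
    q = queue.Queue()
    for msg in messages:
        q.put((msg, 1))  # (payload, attempt number)
    results, dead_letters = [], []
    lock = threading.Lock()

    def worker():
        while True:
            item = q.get()
            if item is None:  # stop signal
                q.task_done()
                return
            msg, attempt = item
            try:
                outcome = handler(msg)
                with lock:
                    results.append(outcome)  # success: roughly an "ack"
            except Exception:
                if attempt < max_attempts:
                    q.put((msg, attempt + 1))  # roughly a "nack" with requeue
                else:
                    with lock:
                        dead_letters.append(msg)  # roughly dead-lettering
            finally:
                q.task_done()

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    q.join()  # returns once every message, including requeued ones, is handled
    for _ in threads:
        q.put(None)
    for t in threads:
        t.join()
    return results, dead_letters


if __name__ == "__main__":
    def handle(n):
        if n < 0:
            raise ValueError("invalid payload")
        return n * 2

    results, dead = run_workers([1, 2, 3, -1], handle)
    print(sorted(results), dead)  # [2, 4, 6] [-1]
```

With a real broker, retry limits and dead-lettering would typically be configured on the queue or tracked in message headers rather than in an in-memory tuple.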

Backend

The backend work was part of a complete architectural overhaul from a monolith to a microservices architecture, guided by Kintsugi's in-house tech architects and leadership. It included:

- Microservices development: Building two new microservices.

- Service migration: Migrating existing services from Python to Go.

- AI integration: Integrating AI models into the application and optimizing deployments.

- Cloud migration: Migrating services to Google Cloud Platform.

Scope of work:

- Backend and AI service stabilization: Ensured reliable operation of the backend and the AI communication service.

- Survey service deployment: Prepared the survey service for deployment, enabling dynamic survey creation from CSV files in the admin panel.

- AI model integration: Integrated several distinct AI models with the AI communication service, streamlining workflows and deployment.

- Dependency management for AI service: Resolved the AI service's dependencies so that it can operate and scale reliably.

- Docker image optimization: Reduced Docker image size for the AI service by 44%, significantly lowering resource usage.

- Google Cloud transition: Assisted with migrating legacy services to Google Cloud’s enterprise infrastructure.

- Automated migration mechanisms: Developed a migration mechanism that lets each microservice manage its own database tables and entities independently.

- Cloud function update for iOS app: Transitioned a cloud function utilized in the iOS app for classification tasks.

- Extensive testing: Ensured reliability through rigorous testing procedures across services and updates.
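The case study does not detail how the migration mechanism works. The following is a minimal sketch of the idea, assuming each service keeps its own migration history table so it can evolve its schema without coordinating with other services. `sqlite3` stands in for PostgreSQL, and the `migrate` function and the `schema_migrations_<service>` table naming are hypothetical.

```python
import sqlite3


def migrate(conn, service, migrations):
    """Apply a service's pending migrations and return the versions applied.

    Hypothetical sketch: each service records its history in its own table,
    schema_migrations_<service>, so services never touch each other's history.
    Expects a connection opened with isolation_level=None (explicit transactions).
    """
    if not service.isidentifier():
        raise ValueError(f"unsafe service name: {service!r}")  # name is interpolated into SQL
    history = f"schema_migrations_{service}"
    conn.execute(f"CREATE TABLE IF NOT EXISTS {history} (version INTEGER PRIMARY KEY)")
    applied = {row[0] for row in conn.execute(f"SELECT version FROM {history}")}

    newly_applied = []
    for version, sql in sorted(migrations.items()):
        if version in applied:
            continue
        conn.execute("BEGIN")
        try:
            conn.execute(sql)  # schema change owned by this service
            conn.execute(f"INSERT INTO {history} (version) VALUES (?)", (version,))
            conn.execute("COMMIT")  # change and history row commit together
        except Exception:
            if conn.in_transaction:
                conn.execute("ROLLBACK")  # SQLite DDL is transactional, so this undoes it
            raise
        newly_applied.append(version)
    return newly_applied


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:", isolation_level=None)
    survey_migrations = {
        1: "CREATE TABLE surveys (id INTEGER PRIMARY KEY, title TEXT)",
        2: "ALTER TABLE surveys ADD COLUMN source_csv TEXT",
    }
    ai_migrations = {1: "CREATE TABLE ai_requests (id INTEGER PRIMARY KEY, status TEXT)"}
    print(migrate(conn, "survey", survey_migrations))  # [1, 2]
    print(migrate(conn, "ai", ai_migrations))          # [1]
    print(migrate(conn, "survey", survey_migrations))  # [] (already applied)
```

PostgreSQL also supports transactional DDL, so the same approach of committing a schema change together with its history row carries over to Cloud SQL for PostgreSQL.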

Key results

These efforts contributed to achieving operational scalability, enhanced functionality, and optimized resource utilization across the project's architecture.

Project management

At Mad Devs, transparency is central to how we develop projects and communicate, both within our internal teams and with clients. This open and honest approach ensures that everyone involved (clients, stakeholders, and team members) clearly understands the project's progress, challenges, and decisions.

We integrate this principle of transparency with agile methodologies to create a dynamic and responsive workflow. This approach allows the project team to stay fully engaged throughout the development lifecycle, from initial planning to deployment. Regular updates, open channels of communication, and real-time feedback loops help us make informed adjustments when needed.

With Agile's iterative process, we break down the project into manageable sprints, ensuring that goals are clear and achievable within short time frames. This enables us to deliver incremental improvements while staying flexible.

Ultimately, this workflow allowed us to maintain a high level of collaboration, trust, and accountability, leading to more successful outcomes and stronger relationships with our clients.

Tech stack

Python

Go

FastAPI

gRPC

Google Cloud Platform (GCP)

Cloud SQL (PostgreSQL)

Redis

Google Cloud Storage (GCS)

Docker

Kubernetes

Terraform

GitLab CI/CD

Jenkins

Prometheus

Grafana

RabbitMQ

HashiCorp Vault

Role-based access control (RBAC)

Git (GitLab)

Jira

Confluence

Meet the team

Alice Jang

Delivery Manager

Kirill Zinchenko

Delivery Manager

Maksim Pankov

Project Manager

Kirill Kulikov

DevOps Engineer

Kirill Avdeev

Backend Engineer

Nikita Vovchenko

Backend Engineer

Akylbek Djumaliev

Backend Engineer

Ivan Filyanin

DevOps Engineer

Nuradil Alymkulov

Backend Engineer